„Within a few decades, autonomous and semi-autonomous machines will be found throughout Earth’s environments, from homes and gardens to parks and farms and so-called working landscapes – everywhere, really, that humans are found, and perhaps even places we’re not. And while much attention is given to how those machines will interact with people, far less is paid to their impacts on animals.“ (Anthropocene, October 10, 2018) „Machines can disturb, frighten, injure, and kill animals,“ says Oliver Bendel, an information systems professor at the University of Applied Sciences and Arts Northwestern Switzerland, according to the magazine. „Animal-friendly machines are needed.“ (Anthropocene, October 10, 2018) In the article „Will smart machines be kind to animals?“ the magazine Anthropocene deals with animal-friendly machines and introduces the work of the scientist. It is based on his paper „Towards animal-friendly machines“ (Paladyn) and an interview conducted by journalist Brandon Keim with Oliver Bendel. More via www.anthropocenemagazine.org/2018/10/animal-friendly-ai/.

Machine Ethics and Philosophy

In the „Handbuch Maschinenethik“ (Handbook of Machine Ethics), edited by Oliver Bendel, a contribution by Catrin Misselhorn entitled „Maschinenethik und Philosophie“ (Machine Ethics and Philosophy) was published at the beginning of July 2018. The abstract: „Machine ethics is a field of research at the interface of philosophy and computer science. This contribution deals, first, with the basic philosophical concepts and presuppositions of machine ethics. These are of particular importance because they raise questions that in part fundamentally call the possibility of machine ethics into doubt. Second, it addresses the various roles of philosophy at different levels within machine ethics and sets out the methodological implementation of this interdisciplinary research program.“ (Website Springer) An overview of the contributions, which are continuously published electronically, can be found via link.springer.com/referencework/10.1007/978-3-658-17484-2 … The printed book will be released in a few months.

Fig.: Machine ethics helps shape machines

International Workshop on Ethics and AI

The international workshop „Understanding AI & Us“ will take place in Berlin (Alexander von Humboldt Institute for Internet and Society) on 30 June 2018. It is hosted by Joanna Bryson (MIT), Janina Loh (University of Vienna), Stefan Ullrich (Weizenbaum Institute Berlin) and Christian Djeffal (IoT and Government, Berlin). Birgit Beck, Oliver Bendel and Pak-Hang Wong are invited to the panel on the ethical challenges of artificial intelligence. The aim of the workshop is to bring together experts from the field of research reflecting on AI. The event is funded by the Volkswagen Foundation (VolkswagenStiftung). The project „Understanding AI & Us“ furthers and deepens the understanding of artificial intelligence (AI) in an interdisciplinary way. „This is done in order to improve the ways in which AI-systems are invented, designed, developed, and criticised.“ (Invitation letter) „In order to achieve this, we form a group that merges different abilities, competences and methods. The aim is to provide space for innovative and out-of-the-box-thinking that would be difficult to pursue in ordinary academic discourse in our respective disciplines. We are seeking ways to merge different disciplinary epistemological standpoints in order to increase our understanding of the development of AI and its impact upon society.“ (Invitation letter)

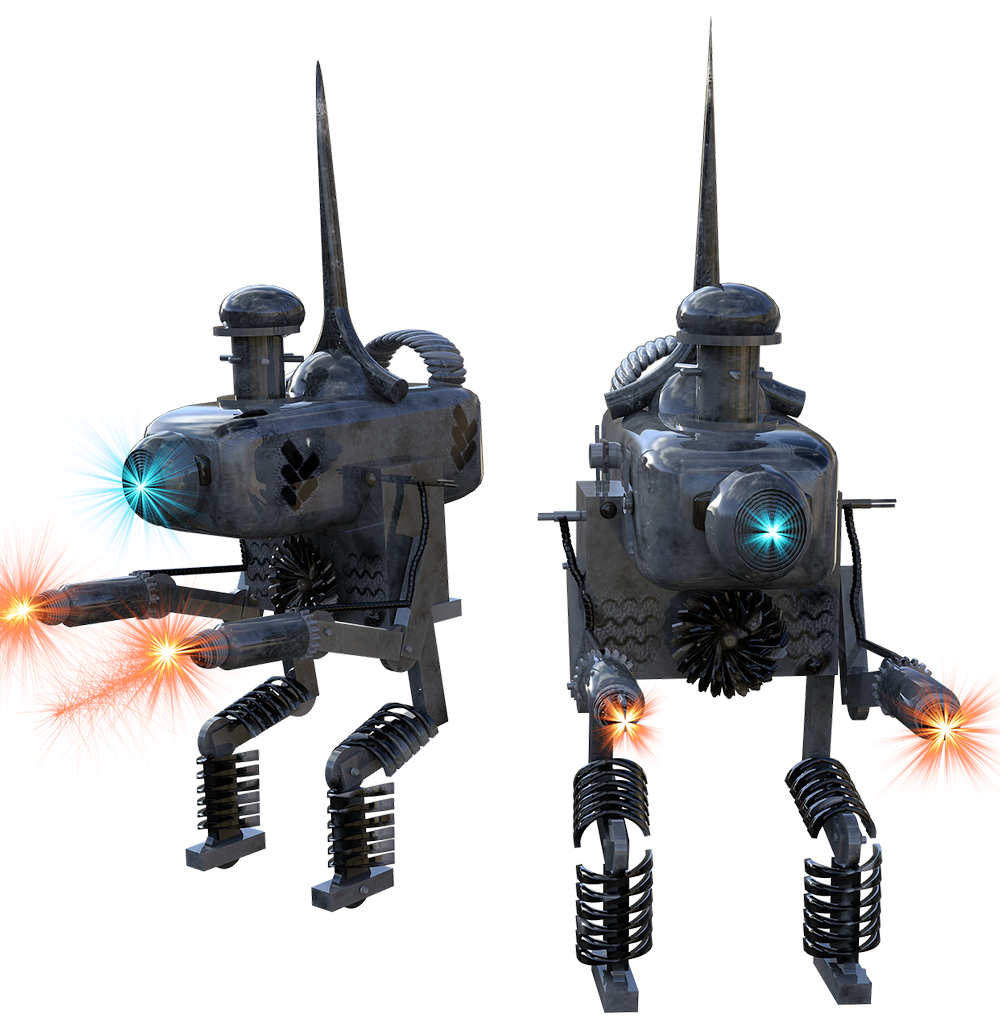

Fig.: Combat robots could also be an issue

Machine Ethics and Artificial Intelligence

The young discipline of machine ethics deals with the morality of semi-autonomous and autonomous machines, robots, bots or software systems. They become special moral agents, and depending on their behavior, we can call them moral or immoral machines. They decide and act in situations where they are left to their own devices, either by following pre-defined rules or by comparing their current situations to case models, or as machines capable of learning and deriving rules. Moral machines have been known for some years, at least as simulations and prototypes. Machine ethics works closely with artificial intelligence and robotics. The term „machine morality“ can be used analogously to the term „artificial intelligence“. Oliver Bendel has developed a graphic that illustrates the relationship between machine ethics and artificial intelligence. He presented it at conferences at Stanford University (AAAI Spring Symposia), in Fort Lauderdale (ISAIM) and Vienna (Robophilosophy) in 2018.

Fig.: The terms of machine ethics and artificial intelligence

AAAI Spring Symposium on AI and Society

The tentative schedule of the AAAI 2018 Spring Symposium on AI and Society at Stanford University (26 – 28 March 2018) has been published. On Tuesday, Emma Brunskill from Stanford University, Philip C. Jackson („Toward Beneficial Human-Level AI … and Beyond“) and Andrew Williams („The Potential Social Impact of the Artificial Intelligence Divide“) will give lectures. Oliver Bendel will give two talks, one on „The Uncanny Return of Physiognomy“ and one on „From GOODBOT to BESTBOT“. From the description on the website: „Artificial Intelligence has become a major player in today’s society and that has inevitably generated a proliferation of thoughts and sentiments on several of the related issues. Many, for example, have felt the need to voice, in different ways and through different channels, their concerns on: possible undesirable outcomes caused by artificial agents, the morality of their use in specific sectors, such as the military, and the impact they will have on the labor market. The goal of this symposium is to gather a diverse group of researchers from many disciplines and to ignite a scientific discussion on this topic.“

Fig.: The symposium is about AI and society

Robophilosophy

„Robophilosophy 2018 – Envisioning Robots In Society: Politics, Power, And Public Space“ is the third event in the Robophilosophy Conference Series which focusses on robophilosophy, a new field of interdisciplinary applied research in philosophy, robotics, artificial intelligence and other disciplines. The main organizers are Prof. Dr. Mark Coeckelbergh, Dr. Janina Loh and Michael Funk. Plenary speakers are Joanna Bryson (Department of Computer Science, University of Bath, UK), Hiroshi Ishiguro (Intelligent Robotics Laboratory, Osaka University, Japan), Guy Standing (Basic Income Earth Network and School of Oriental and African Studies, University of London, UK), Catelijne Muller (Rapporteur on Artificial Intelligence, European Economic and Social Committee), Robert Trappl (Head of the Austrian Research Institute for Artificial Intelligence, Austria), Simon Penny (Department of Art, University of California, Irvine), Raja Chatila (IEEE Global Initiative for Ethical Considerations in AI and Automated Systems, Institute of Intelligent Systems and Robotics, Pierre and Marie Curie University, Paris, France), Josef Weidenholzer (Member of the European Parliament, domains of automation and digitization) and Oliver Bendel (Institute for Information Systems, FHNW University of Applied Sciences and Arts Northwestern Switzerland). The conference will take place from 14 to 17 February 2018 in Vienna. More information via conferences.au.dk/robo-philosophy/.

Fig.: Robophilosophy in Vienna

Conference on AI, Ethics, and Society

AAAI announced the launch of the AAAI/ACM Conference on AI, Ethics, and Society, to be co-located with AAAI-18, February 2-3, 2018 in New Orleans. The Call for Papers is available at www.aies-conference.com (link no longer valid). October 31 is the deadline for submissions. „As AI is becoming more pervasive in our life, its impact on society is more significant and concerns and issues are raised regarding aspects such as value alignment, data bias and data policy, regulations, and workforce displacement. Only a multi-disciplinary and multi-stakeholder effort can find the best ways to address these concerns, including experts of various disciplines, such as AI, computer science, ethics, philosophy, economics, sociology, psychology, law, history, and politics.“ (AAAI information) The new conference complements and expands the classical AAAI Spring Symposia at Stanford University (including symposia like „AI for Social Good“ in 2017 or „AI and Society: Ethics, Safety and Trustworthiness in Intelligent Agents“ in 2018).

Fig.: AI and ethics could help society

Robophilosophy 2018

The conference „Robophilosophy 2018 – Envisioning Robots In Society: Politics, Power, And Public Space“ will take place in Vienna (February 14 – 17, 2018). According to the website, it has three main aims; it shall present interdisciplinary humanities research „in and on social robotics that can inform policy making and political agendas, critically and constructively“, investigate „how academia and the private sector can work hand in hand to assess benefits and risks of future production formats and employment conditions“ and explore how research in the humanities, including art and art research, in the social and human sciences, „can contribute to imagining and envisioning the potentials of future social interactions in the public space“ (Website Robophilosophy). Plenary speakers are Joanna Bryson (Department of Computer Science, University of Bath, UK), Alan Winfield (FET – Engineering, Design and Mathematics, University of the West of England, UK) and Catelijne Muller (Rapporteur on Artificial Intelligence, European Economic and Social Committee). Deadline for submission of abstracts for papers and posters is October 31. More information via conferences.au.dk/robo-philosophy/.

Fig.: Reflexions on robots

Love and Sex with Robots

The conference „Love and Sex with Robots“, held in December 2016 at the University of London (Goldsmiths), met with an enormous international response. In the English and American tabloid media in particular, statements by the speakers were twisted and distorted. What they really said and meant can now be read in black and white. At the end of April 2017, the book „Love and Sex with Robots“ was published by Springer in the series „Lecture Notes in Artificial Intelligence“. From the blurb: „This book constitutes the refereed proceedings of the Second International Conference on Love and Sex with Robots 2016 in December 2016, in London, UK. The 12 revised papers presented together with 1 keynote were carefully reviewed and selected from a total of 38 submissions. … The topics of the conferences were as follows: robot emotions, humanoid robots, clone robots, entertainment robots, robot personalities, teledildonics, intelligent electronic sex hardware, gender approaches, affective approaches, psychological approaches, sociological approaches, roboethics, and philosophical approaches.“ (Blurb) Contributions come from David Levy, Emma Yann Zhang and Oliver Bendel, among others. The book can be ordered here.

We love AI

„We love AI“ is the name of a new website by Deutsche Telekom that is devoted entirely to artificial intelligence. Jan Hofmann welcomes the visitors: „We are convinced that artificial intelligence will change not only customer service but our entire industry – every industry, our society and our daily lives.“ (Website We love AI) One of the first interviews was conducted with the information and machine ethicist Oliver Bendel. He investigates the possibility of machine morality and builds simple moral machines as artifacts, which he then examines in turn. About his latest project within machine ethics, which aims at an animal-friendly robot vacuum cleaner called LADYBIRD, he says: „I am concerned with the principle. I want to show that such animal-friendly machines are possible. That decision trees, for example, can be used to build such machines. Moral justifications can be included in these decision trees. Ladybird is a very vivid example. We are building a machine that has certain color sensors, that has pattern and image recognition and that actually recognizes the ladybird and spares it. I want to illustrate the principle: it is possible to build a machine that follows certain ethical models and moral rules.“ The whole interview can be accessed via www.we-love.ai/de/blog/post/Interview-OliverBendel.html.

Fig.: The robot vacuum cleaner recognizes ladybirds
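
The idea mentioned in the interview, a decision tree whose nodes carry moral justifications, can be illustrated with a minimal sketch. It is not the LADYBIRD implementation: the `Node` structure, the observation keys (`object_ahead`, `red_with_dark_spots`, `pattern_matches_ladybird`), the action names and the wording of the justifications are all assumptions made up for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    """One node of an annotated decision tree (hypothetical structure)."""
    question: Optional[str] = None   # key looked up in the observation
    yes: Optional["Node"] = None     # branch taken if the answer is true
    no: Optional["Node"] = None      # branch taken if the answer is false
    action: Optional[str] = None     # set only on leaf nodes
    justification: str = ""          # moral reasoning attached to this node


def decide(node, observation):
    """Walk the tree; return the action and the justifications met on the way."""
    reasons = []
    while node.action is None:
        if node.justification:
            reasons.append(node.justification)
        node = node.yes if observation[node.question] else node.no
    if node.justification:
        reasons.append(node.justification)
    return node.action, reasons


# Leaves (assumed actions, not taken from the project).
SPARE = Node(action="stop_and_wait",
             justification="A ladybird is a living being and should be spared.")
VACUUM = Node(action="keep_vacuuming")

# Assumed checks: an obstacle sensor, a color sensor, then image recognition.
TREE = Node(
    question="object_ahead",
    yes=Node(
        question="red_with_dark_spots",
        justification="A red, spotted object might be an animal, so look closer.",
        yes=Node(question="pattern_matches_ladybird", yes=SPARE, no=VACUUM),
        no=VACUUM,
    ),
    no=VACUUM,
)

action, reasons = decide(TREE, {
    "object_ahead": True,
    "red_with_dark_spots": True,
    "pattern_matches_ladybird": True,
})
print(action)
for reason in reasons:
    print("-", reason)
```

Keeping the justification next to each node means that every decision can be reported together with the reasoning that led to it, which is one way to read the remark that moral justifications can be included in the tree.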

AI for Social Good II

The AAAI Spring Symposium „AI for Social Good“ continued on March 27, 2017, after the coffee break at 11:00 am. Eric Rice (USC School of Social Work) and Sharad Goel (Stanford University) gave keynotes on the topic „AI for the Social Sciences“. Their starting point: „While the public frets over a future threat of killer robots or the dream of a driverless car, computer scientists and social scientists can engage in novel solutions to vexing social problems today.“ (Website AISOC) Eric Rice is a co-founder of the USC Center for Artificial Intelligence in Society. He first presented his own field of research, social work, and then addressed homelessness in the USA. Artificial intelligence can be applied in this field too, for example by making networks and their members visible and then encouraging individuals to take on certain roles, such as that of a leader or role model. Sharad Goel’s talk was entitled „Algorithms to assist judicial decision-making“. The assistant professor at the Department of Management Science & Engineering at Stanford University emphasized that simple, interpretable rules are important in this area; in his view, the rulings must be „fast, frugal and clear“. The scientist closed with a promise „of greater efficiency, equity, and transparency“.

Kill Switches for Robots

EU rules for the fields of robotics and artificial intelligence, to settle issues such as compliance with ethical standards and liability for accidents involving self-driving cars, should be put forward by the EU Commission, urged the Legal Affairs Committee on January 12, 2017. The media have reported on this on television, on the radio and in newspapers. According to the Parliament’s website, rapporteur Mady Delvaux said: „A growing number of areas of our daily lives are increasingly affected by robotics. In order to address this reality and to ensure that robots are and will remain in the service of humans, we urgently need to create a robust European legal framework.“ (Website European Parliament) The members of the European Parliament push „the Commission to consider creating a European agency for robotics and artificial intelligence to supply public authorities with technical, ethical and regulatory expertise“ (Website European Parliament). „They also propose a voluntary ethical conduct code to regulate who would be accountable for the social, environmental and human health impacts of robotics and ensure that they operate in accordance with legal, safety and ethical standards.“ (Website European Parliament) More concretely, roboticists could include „kill“ switches so that robots can be turned off in emergencies. This raises questions about, for example, which robots should be equipped with such switches, and which persons should be able to „kill“ the robots. More information via www.europarl.europa.eu/news/en/news-room/20170110IPR57613/robots-legal-affairs-committee-calls-for-eu-wide-rules.

Fig.: A robot reads the ethical conduct code

AI for the Social Good

The AAAI 2017 Spring Symposia take place from March 27 to 29, 2017. They are organized by the Association for the Advancement of Artificial Intelligence in cooperation with the Department of Computer Science at Stanford University. The symposium „AI for the Social Good“ at Stanford University also addresses topics of robot ethics and machine ethics. The website states: „A rise in real-world applications of AI has stimulated significant interest from the public, media, and policy makers, including the White House Office of Science and Technology Policy (OSTP). Along with this increasing attention has come media-fueled concerns about purported negative consequences of AI, which often overlooks the societal benefits that AI is delivering and can deliver in the near future. This symposium will focus on the promise of AI across multiple sectors of society.“ (Website AISOC) In a talk session, Oliver Bendel speaks about „LADYBIRD: the Animal-Friendly Robot Vacuum Cleaner“. He appears again in the Lightning Talks session with the talk „Towards Kant Machines“. In the same session, Mahendra Prasad talks about „A Framework for Modelling Altruistic Intelligence Explosions“ (preceded by the title „Back to the Future“), and Thomas Doherty explores the question „Can Artificial Intelligence have Ecological Intelligence?“. The full program is available via scf.usc.edu/~amulyaya/AISOC17/papers.html.

Fig.: LADYBIRD specializes in ladybirds

Ethically Aligned Design

The members of the IEEE Global Initiative presented their first results in December 2016. „Ethically Aligned Design, Version 1“ is available online. It outlines „A Vision for Prioritizing Human Wellbeing with Artificial Intelligence and Autonomous Systems“, as the subtitle puts it. The executive summary states: „To fully benefit from the potential of Artificial Intelligence and Autonomous Systems (AI/AS), we need to go beyond perception and beyond the search for more computational power or solving capabilities. We need to make sure that these technologies are aligned to humans in terms of our moral values and ethical principles. AI/AS have to behave in a way that is beneficial to people beyond reaching functional goals and addressing technical problems. This will allow for an elevated level of trust between humans and our technology that is needed for a fruitful pervasive use of AI/AS in our daily lives.“ The members of the committee „Classical Ethics in Information & Communication Technologies“ include Rafael Capurro, Wolfgang Hofkirchner and Oliver Bendel, to name only those based in the German-speaking world. The second meeting of the IEEE Global Initiative takes place on June 5, 2017, in Austin (Texas). At the „Symposium on Ethics of Autonomous Systems (SEAS North America)“, a second version of the document will be developed.

Fig.: The symposium takes place at the University of Texas

What can we ethically outsource to machines?

Prior to the hearing in the Parliament of the Federal Republic of Germany on 22 June 2016 from 4 – 6 pm, the invited experts had sent their written comments on ethical and legal issues with respect to the use of robots and artificial intelligence. The video of the hearing can be accessed via www.bundestag.de/dokumente/textarchiv/2016/kw25-pa-digitale-agenda/427996. The documents of Oliver Bendel (School of Business FHNW), Eric Hilgendorf (University of Würzburg), Norbert Elkman (Fraunhofer IPK) and Ryan Calo (University of Washington) were published in July on the website of the German Bundestag. Answering the question „Apart from legal questions, for example concerning responsibility and liability, where will ethical questions, in particular, also arise with regard to the use of artificial intelligence or as a result of the aggregation of information and algorithms?“ the US scientist explained: „Robots and artificial intelligence raise just as many ethical questions as legal ones. We might ask, for instance, what sorts of activities we can ethically outsource to machines. Does Germany want to be a society that relegates the use of force, the education of children, or eldercare to robots? There are also serious challenges around the use of artificial intelligence to make material decisions about citizens in terms of minimizing bias and providing for transparency and accountability – issues already recognized to an extent by the EU Data Directive.“ (Website German Bundestag) All documents (most of them in German) are available via www.bundestag.de/bundestag/ausschuesse18/a23/anhoerungen/fachgespraech/428268.

AI for Human-Robot Interaction

The call for papers has been published for the AAAI Fall Symposium „AI for Human-Robot Interaction (AI-HRI)“, to be held from November 17 to 19, 2016. It is aimed specifically at social scientists. The call states: „AI-HRI (The AAAI Fall Symposium on Artificial Intelligence (AI) for Human-Robot Interaction (HRI)) seeks to bring together the subset of the HRI community focused on the application of AI solutions to HRI problems. Building on the success of the two previous years’ symposia, the central purpose of this year’s symposium is to share exciting new and ongoing research, foster discussion about necessary areas for future work, and cultivate a vibrant, interconnected research community.“ Full-length submissions focus on the use of autonomous AI systems. Short papers describe challenges that social scientists encounter when designing, conducting and evaluating HRI studies. The deadline is July 15, 2016. The conference takes place in Arlington (Virginia). Further information via ai-hri.github.io.